Stories from the Heart of St Chris

School news, thought pieces, and developments at St Chris.

St Chris is a leading progressive independent school based in Letchworth Garden City, Hertfordshire.

Our student community, made up of young people aged between 3 and 18, takes part in a truly holistic curriculum supported by residential and local school trips and personal development. Read about our students’ recent endeavours on this news page, filter by school, explore popular articles, and search for news.

-

Fri, 09 May 2025

The Roundup - Edition 70

-

Fri, 09 May 2025

The Roundup - Edition 69

-

Fri, 09 May 2025

The Roundup - Edition 68

-

Fri, 09 May 2025

The Roundup - Edition 67

-

Fri, 09 May 2025

The Roundup - Edition 66

-

Fri, 09 May 2025

The Roundup - Edition 65

-

Fri, 09 May 2025

The Roundup - Edition 64

-

Fri, 02 May 2025

The Roundup - Edition 63

-

Fri, 25 Apr 2025

The Roundup - Edition 62

-

Fri, 04 Apr 2025

The Roundup - Edition 61

-

Fri, 28 Mar 2025

The Roundup - Edition 60

-

Fri, 21 Mar 2025

The Roundup - Edition 59

-

Fri, 07 Mar 2025

The Roundup - Edition 58

-

Fri, 07 Mar 2025

The Roundup - Edition 57

-

Fri, 07 Mar 2025

Literary Festival

-

Fri, 28 Feb 2025

The Roundup - Edition 56

-

Wed, 26 Feb 2025

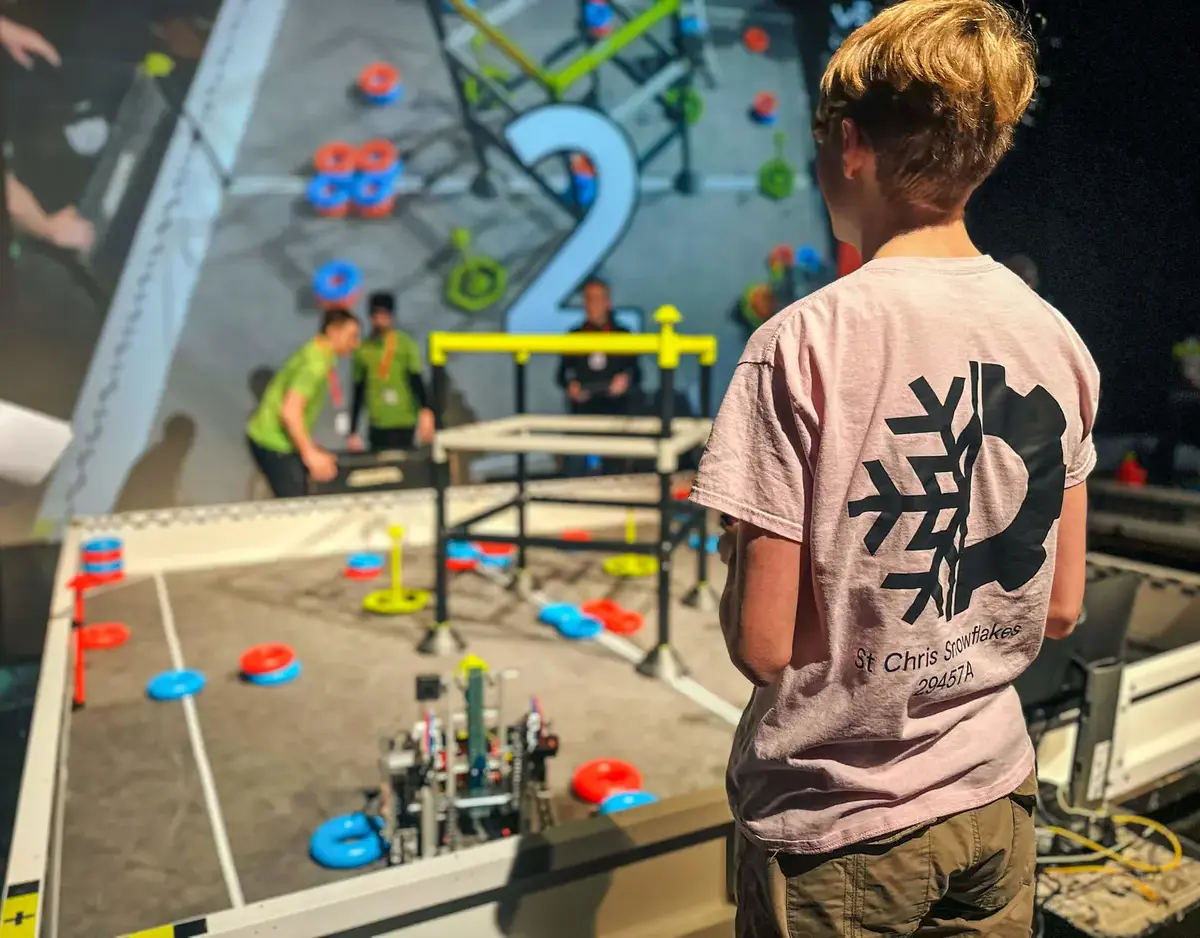

St Chris Host Robotics Tournament

-

Fri, 14 Feb 2025

The Roundup - Edition 55

-

Fri, 07 Feb 2025

The Roundup - Edition 54

-

Fri, 31 Jan 2025

The Roundup - Edition 53

-

Fri, 31 Jan 2025

Meet Alison Burrows

-

Tue, 28 Jan 2025

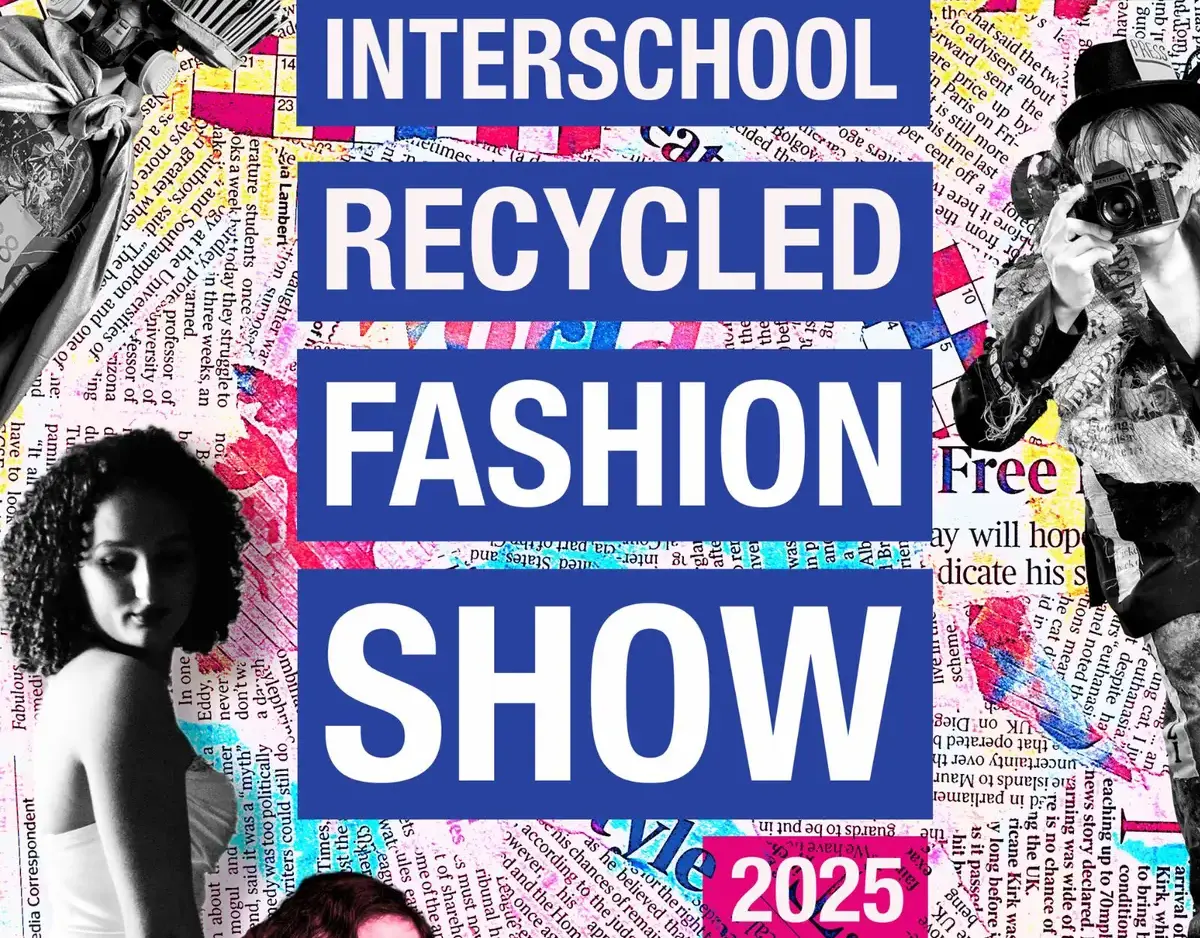

Recycled Fashion Show

-

Fri, 24 Jan 2025

The Roundup - Edition 52

-

Fri, 24 Jan 2025

Meet Pam Sunner

-

Fri, 17 Jan 2025

Meet Ann-Marie Knight

-

Fri, 17 Jan 2025

The Roundup - Edition 51

-

Fri, 10 Jan 2025

The Roundup - Edition 50

-

Fri, 10 Jan 2025

Meet Kirsty Baker

-

Thu, 12 Dec 2024

Alfie Watts Visits St Chris

-

Thu, 12 Dec 2024

Meet Connor Vincent

-

Fri, 06 Dec 2024

Sixth Form at St Chris

-

Fri, 06 Dec 2024

The Roundup - Edition 49

-

Fri, 06 Dec 2024

The Roundup - Edition 48

-

Fri, 06 Dec 2024

Meet Amy Anderson

-

Fri, 29 Nov 2024

The Roundup - Edition 47

-

Fri, 29 Nov 2024

Meet Liv Pastor

-

Fri, 22 Nov 2024

The Roundup - Edition 46

-

Fri, 22 Nov 2024

Meet Andrew Lambie

-

Fri, 15 Nov 2024

The Roundup - Edition 45

-

Fri, 08 Nov 2024

Meet Denise Robinson

-

Fri, 08 Nov 2024

The Roundup - Edition 44

-

Fri, 18 Oct 2024

The Roundup - Edition 43

-

Thu, 17 Oct 2024

Meet Sarah Armstrong

-

Fri, 11 Oct 2024

Meet Kelly Wailes

-

Fri, 11 Oct 2024

The Roundup - Edition 42

-

Fri, 04 Oct 2024

Solutions Not Sides

-

Fri, 04 Oct 2024

The Roundup - Edition 41

-

Fri, 27 Sep 2024

The Roundup - Edition 40

-

Thu, 26 Sep 2024

Meet Amelia Turvey

-

Thu, 26 Sep 2024

Apple Day

-

Fri, 20 Sep 2024

The Roundup - Edition 39

-

Fri, 20 Sep 2024

Students vs Alumni Football Tournament

-

Fri, 20 Sep 2024

Meet Angela Phillips

-

Thu, 19 Sep 2024

Meet Scarlett

-

Fri, 13 Sep 2024

The Roundup - Edition 38

-

Fri, 13 Sep 2024

Meet Lucy Pinkstone

-

Fri, 06 Sep 2024

The Roundup - Edition 37

-

Fri, 06 Sep 2024

Meet Kishon Mather

-

Fri, 26 Jan 2024

Here we Come a Wassailing

-

Fri, 12 Jan 2024

Is AI a force for good or evil?

-

Thu, 30 Nov 2023

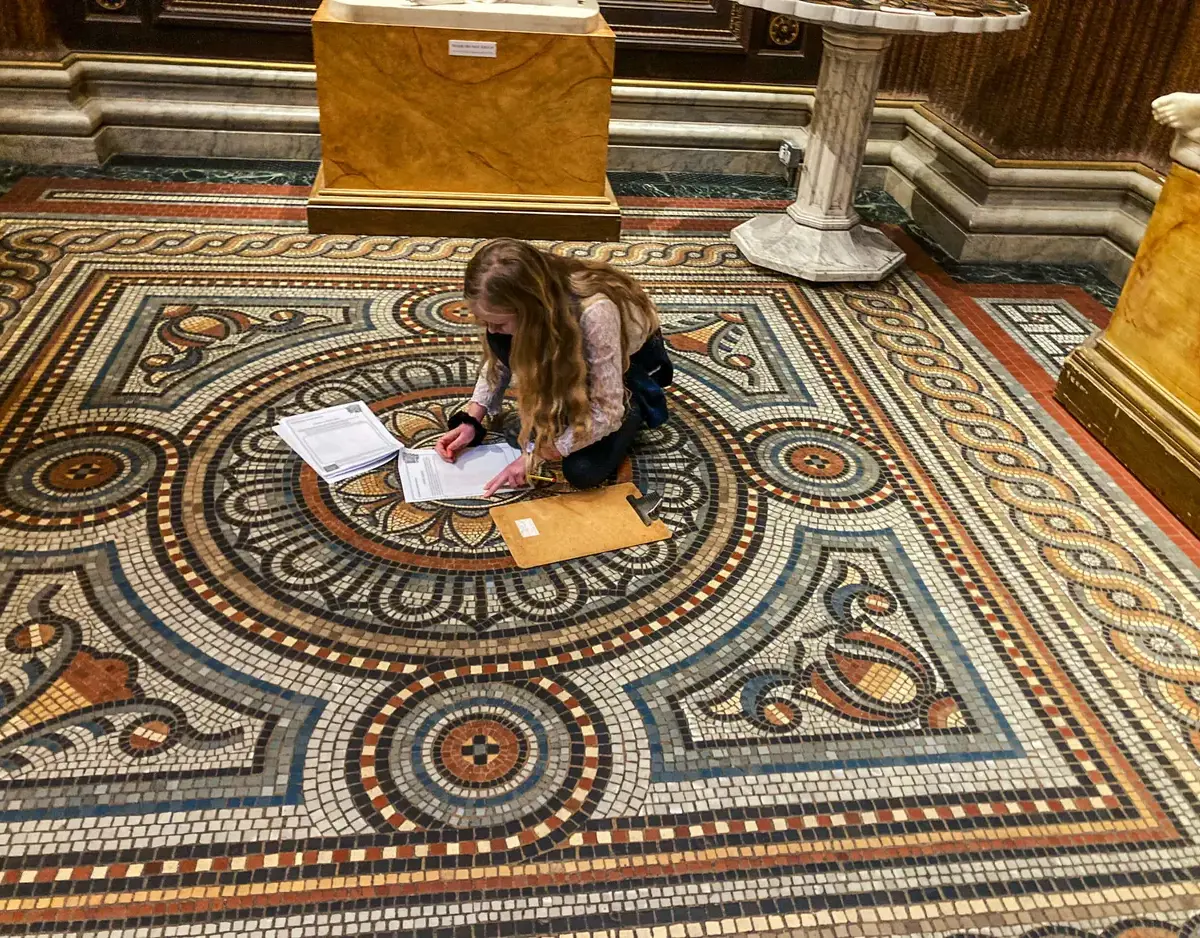

British Schools Museum

-

Wed, 29 Nov 2023

No Pens Day

-

Thu, 16 Nov 2023

Faith Week 2023

-

Wed, 08 Nov 2023

Stay and Play: Forest School

-

Tue, 24 Oct 2023

Term of Educational Excellence

-

Thu, 05 Oct 2023

Apple Fest at St Chris

-

Thu, 24 Aug 2023

GCSE Results Day 2023

-

Tue, 22 Aug 2023

Trip to Morzine

-

Thu, 17 Aug 2023

A Level Results Day

-

Wed, 05 Jul 2023

Zahra’s Work Experience Week